Computing Information Entropy in Python: An Example

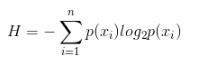

The formula for information entropy is shown above: n is the number of classes and p(xi) is the probability of the i-th class.
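For reference, here is the formula written out, assuming the image shows the standard Shannon entropy with a base-2 logarithm (the base used by the code in this article). The worked numbers use a made-up dataset of 5 samples, 2 labelled "yes" and 3 labelled "no".

```latex
H(X) = -\sum_{i=1}^{n} p(x_i)\,\log_2 p(x_i)

% Worked example (hypothetical data): p(yes) = 2/5, p(no) = 3/5
H = -\left(\tfrac{2}{5}\log_2\tfrac{2}{5} + \tfrac{3}{5}\log_2\tfrac{3}{5}\right)
  \approx 0.529 + 0.442 = 0.971 \text{ bits}
```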

Suppose the dataset has m rows, that is, m samples, and the last column of each row holds that sample's label. The dataset's information entropy can then be computed as follows:

    from math import log


    def calcShannonEnt(dataSet):
        numEntries = len(dataSet)  # number of samples
        labelCounts = {}  # frequency of each class in the dataset
        for featVec in dataSet:  # for each sample row
            currentLabel = featVec[-1]  # label of this sample
            if currentLabel not in labelCounts:
                labelCounts[currentLabel] = 0
            labelCounts[currentLabel] += 1
        shannonEnt = 0.0
        for key in labelCounts:
            prob = float(labelCounts[key]) / numEntries  # compute p(xi)
            shannonEnt -= prob * log(prob, 2)  # log base 2
        return shannonEnt
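As a quick sanity check, the sketch below computes the same quantity with `collections.Counter` on a small made-up dataset. The function name `shannon_entropy` and the sample rows are illustrative assumptions, not part of the original code.

```python
from collections import Counter
from math import log2


def shannon_entropy(data_set):
    """Entropy (in bits) of the labels stored in the last column of each row."""
    label_counts = Counter(row[-1] for row in data_set)
    total = len(data_set)
    return -sum((c / total) * log2(c / total) for c in label_counts.values())


# Hypothetical toy dataset: two features followed by a class label.
toy_data = [
    [1, 1, "yes"],
    [1, 1, "yes"],
    [1, 0, "no"],
    [0, 1, "no"],
    [0, 1, "no"],
]

entropy = shannon_entropy(toy_data)
print(round(entropy, 4))  # 0.971
```

With 2 "yes" and 3 "no" labels, the class probabilities are 0.4 and 0.6, so the result is a little under 1 bit. A dataset with a single class gives an entropy of 0.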

Supplementary notes: implementing information entropy, conditional entropy, information gain, and the Gini coefficient in Python

Without further ado, let's go straight to the code:

    import math

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import seaborn as sns


    # Compute information entropy
    def getEntropy(s):
        # Count how often each distinct value occurs
        if not isinstance(s, pd.Series):
            s = pd.Series(s)
        # pd.groupby() no longer exists in current pandas; value_counts() gives the same counts
        prt_ary = s.value_counts().values / float(len(s))
        return -(np.log2(prt_ary) * prt_ary).sum()


    # Compute conditional entropy: the entropy of s2 given s1
    def getCondEntropy(s1, s2):
        d = dict()
        # zip() pairs values by position, so a non-default Series index is not a problem
        for v1, v2 in zip(s1, s2):
            d[v1] = d.get(v1, []) + [v2]
        return sum([getEntropy(d[k]) * len(d[k]) / float(len(s1)) for k in d])


    # Compute information gain
    def getEntropyGain(s1, s2):
        return getEntropy(s2) - getCondEntropy(s1, s2)


    # Compute the gain ratio
    # Note: this divides by the entropy of s2; C4.5's gain ratio divides by
    # the entropy of the splitting attribute (s1) instead.
    def getEntropyGainRadio(s1, s2):
        return getEntropyGain(s1, s2) / getEntropy(s2)


    # Measure the correlation between two discrete variables
    def getDiscreteCorr(s1, s2):
        return getEntropyGain(s1, s2) / math.sqrt(getEntropy(s1) * getEntropy(s2))


    # Compute the sum of squared probabilities
    def getProbSS(s):
        if not isinstance(s, pd.Series):
            s = pd.Series(s)
        prt_ary = s.value_counts().values / float(len(s))
        return sum(prt_ary ** 2)


    # Compute the Gini coefficient
    def getGini(s1, s2):
        d = dict()
        for v1, v2 in zip(s1, s2):
            d[v1] = d.get(v1, []) + [v2]
        return 1 - sum([getProbSS(d[k]) * len(d[k]) / float(len(s1)) for k in d])


    # Compute pairwise correlations of discrete variables, draw a heatmap,
    # and return the correlation matrix
    def DiscreteCorr(C_data):
        C_data_column_names = C_data.columns.tolist()
        # Matrix that stores the correlation coefficients of C_data
        dp_corr_mat = np.zeros([len(C_data_column_names), len(C_data_column_names)])
        for i in range(len(C_data_column_names)):
            for j in range(len(C_data_column_names)):
                # Correlation between attribute i and attribute j
                temp_corr = getDiscreteCorr(C_data.iloc[:, i], C_data.iloc[:, j])
                dp_corr_mat[i][j] = temp_corr
        # Draw the correlation heatmap
        fig = plt.figure()
        fig.add_subplot(2, 2, 1)
        sns.heatmap(dp_corr_mat, vmin=-1, vmax=1,
                    cmap=sns.color_palette('RdBu', n_colors=128),
                    xticklabels=C_data_column_names,
                    yticklabels=C_data_column_names)
        return pd.DataFrame(dp_corr_mat)


    if __name__ == '__main__':
        s1 = pd.Series(['X1', 'X1', 'X2', 'X2', 'X2', 'X2'])
        s2 = pd.Series(['Y1', 'Y1', 'Y1', 'Y2', 'Y2', 'Y2'])
        print('CondEntropy:', getCondEntropy(s1, s2))
        print('EntropyGain:', getEntropyGain(s1, s2))
        print('EntropyGainRadio', getEntropyGainRadio(s1, s2))
        print('DiscreteCorr:', getDiscreteCorr(s1, s1))
        print('Gini', getGini(s1, s2))
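To make the definitions concrete, here is a minimal dependency-free sketch that recomputes the conditional entropy, information gain, and Gini value for the same `X`/`Y` sample data. The helper names (`entropy`, `group_by`, `conditional_entropy`, `gini`) are hypothetical and chosen for illustration. For these inputs the values should agree with the pandas-based functions above.

```python
from collections import Counter, defaultdict
from math import log2


def entropy(values):
    """Shannon entropy (bits) of a sequence of discrete values."""
    total = len(values)
    return -sum((c / total) * log2(c / total) for c in Counter(values).values())


def group_by(xs, ys):
    """Collect the y values that occur together with each distinct x."""
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        groups[x].append(y)
    return groups


def conditional_entropy(xs, ys):
    """H(Y|X): entropy of each group, weighted by the group's share of samples."""
    n = len(xs)
    return sum(len(g) / n * entropy(g) for g in group_by(xs, ys).values())


def gini(xs, ys):
    """One minus the weighted sum of squared class probabilities per group."""
    n = len(xs)
    total = 0.0
    for g in group_by(xs, ys).values():
        sum_sq = sum((c / len(g)) ** 2 for c in Counter(g).values())
        total += len(g) / n * sum_sq
    return 1 - total


xs = ["X1", "X1", "X2", "X2", "X2", "X2"]
ys = ["Y1", "Y1", "Y1", "Y2", "Y2", "Y2"]

cond = conditional_entropy(xs, ys)
gain = entropy(ys) - cond
print(round(cond, 4))          # 0.5409
print(round(gain, 4))          # 0.4591
print(round(gini(xs, ys), 4))  # 0.25
```

The `X1` group contains only `Y1`, so it contributes zero entropy. All of the remaining uncertainty comes from the `X2` group, which holds one `Y1` and three `Y2`.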

That concludes this example of computing information entropy in Python. I hope it serves as a useful reference.
